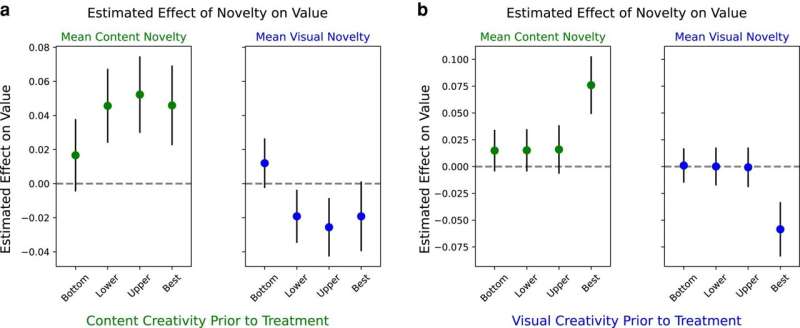

Estimated effect of increases in mean Content and Visual Novelty on Value post-adoption, based on a) average Content Novelty quartiles prior to treatment; b) average Visual Novelty quartiles prior to treatment. Each point shows the estimated effect on Value of post-adoption novelty increases, conditional on creativity level prior to treatment. Credit: PNAS Nexus (2024). DOI: 10.1093/pnasnexus/pgae052

Text-to-image generative AI systems like Midjourney, Stable Diffusion, and DALL-E can produce images from text prompts that, had they been made by humans, would plausibly be judged "creative." Some artists have argued that these programs threaten human creativity. If people come to rely on AI, which draws on what has been done before, to produce most new visual works, creative progress could stagnate.

Eric Zhou and Dokyun "DK" Lee investigated the impact of text-to-image AI tools on human creativity, seeking to understand whether these tools make human artists more or less creative. The authors examined art made with and without AI on an online art-sharing platform. The study is published in the journal PNAS Nexus.

Artists who adopted AI became more productive than they had been before adoption, and their work tended to receive more favorable responses on the platform after they adopted AI.

Compared with controls, artists who used AI showed declining average novelty over time, both in the content of their images and in pixel-level stylistic elements. However, peak content novelty among AI adopters increased marginally over time, suggesting that the creative space is expanding, albeit inefficiently.

Still, artists at all levels of creative ability were evaluated more favorably after adopting AI if they successfully explored new concepts, but were generally penalized when they used AI to explore novel visual styles.

According to the authors, this result hints at a potential complementarity between human ideation and filtering abilities as the core expressions of creativity in a text-to-image workflow. They term this phenomenon "generative synesthesia": the harmony of human idea exploration and AI visual exploitation to discover new creative workflows.

More information: Eric Zhou et al., Generative artificial intelligence, human creativity, and art, PNAS Nexus (2024). DOI: 10.1093/pnasnexus/pgae052

Journal information: PNAS Nexus

Provided by PNAS Nexus