This article has been reviewed according to Science X's editorial process and policies. Editors have highlighted the following attributes while ensuring the content's credibility: fact-checked, trusted source, proofread.

Deep learning-designed diffractive processor computes hundreds of transformations in parallel

Computational tasks have become increasingly complex, and this has driven exponential growth in the power consumed by digital computers. There is therefore a need for hardware that can perform large-scale computing quickly and energy-efficiently.

Optical computers, which use light instead of electricity to perform computations, are promising in this regard. Drawing on the inherent parallelism of optical systems, they can potentially offer lower latency and lower power consumption. As a result, researchers have explored a variety of optical computing designs.

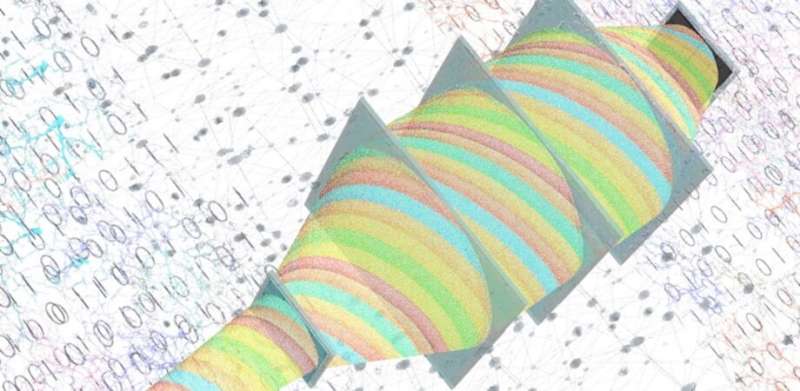

One example is the diffractive optical network, which combines optics and deep learning to perform complex computational tasks, such as image classification and reconstruction, optically. It consists of a stack of structured diffractive layers, each containing thousands of diffractive features (neurons). These passive layers control light-matter interactions, modulating the input light to produce the desired output. Researchers train the network by optimizing the profiles of these layers with deep learning tools. Once fabricated, the resulting design acts as a standalone optical processing module whose only powered component is the input illumination source.
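To make the layer-by-layer picture more concrete, here is a minimal Python sketch of a toy one-dimensional diffractive network. It rests on loudly simplified assumptions: a scalar field sampled at a handful of points, phase-only layers, a made-up free-space kernel exp(i k r) · z / r standing in for an exact Rayleigh-Sommerfeld solution, and phase delays that do not change with wavelength (so material dispersion is ignored). The function names are hypothetical, and the deep-learning training step is not shown.

```python
import cmath
import math


def propagate(field, xs_in, xs_out, z, wavelength):
    """Propagate a sampled 1D complex field across a gap of length z.

    Toy kernel (an assumption, not an exact Rayleigh-Sommerfeld solution):
    each input sample contributes exp(i*k*r) * (z / r), where r is the
    distance to the output sample and z / r acts as an obliquity weight.
    """
    k = 2 * math.pi / wavelength
    out = []
    for x_out in xs_out:
        total = 0j
        for u, x_in in zip(field, xs_in):
            r = math.hypot(x_out - x_in, z)
            total += u * cmath.exp(1j * k * r) * (z / r)
        out.append(total)
    return out


def diffractive_forward(field, phase_layers, xs, z, wavelength):
    """Send a field through a stack of phase-only diffractive layers.

    phase_layers: one list of phase delays (radians) per layer; each entry
    is one diffractive feature ("neuron"). All planes share the sample
    positions xs, and consecutive planes (including the output) are z apart.
    """
    for phases in phase_layers:
        # Free-space hop of length z onto the next layer.
        field = propagate(field, xs, xs, z, wavelength)
        # Passive, phase-only modulation applied by each feature.
        field = [u * cmath.exp(1j * p) for u, p in zip(field, phases)]
    # Last hop from the final layer to the output plane.
    return propagate(field, xs, xs, z, wavelength)


def output_intensity(field):
    """Detectors measure intensity |u|^2 at each output pixel."""
    return [abs(u) ** 2 for u in field]
```

Every step above is linear in the input field, so at a fixed wavelength the whole stack behaves like a single complex matrix from input pixels to output pixels. Training adjusts the phase values so that this matrix (or the detected intensity pattern) matches a target; because the wave number k depends on wavelength, the same layers respond differently to different colors of light.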

So far, researchers have designed monochromatic (single-wavelength) diffractive networks that implement a single linear transformation, that is, a matrix multiplication. But can many more linear transformations be implemented simultaneously? The UCLA research group that first introduced diffractive optical networks has recently addressed this question. In a study published in Advanced Photonics, the group applied a wavelength-multiplexing scheme to a diffractive optical network and showed that a broadband diffractive processor can perform massively parallel linear transformations.

UCLA Chancellor's Professor Aydogan Ozcan, the leader of the research group at the Samueli School of Engineering, briefly describes the architecture and principles of this optical processor: "A broadband diffractive optical processor has input and output fields of view with Ni and No pixels, respectively. They are connected by successive structured diffractive layers, made of passive transmissive materials. A predetermined group of Nw discrete wavelengths encodes the input and output information. Each wavelength is dedicated to a unique target function or complex-valued linear transformation," he explains.

"These target transformations can be specifically assigned for distinct functions such as image classification and segmentation, or they can be dedicated to computing different convolutional filter operations or fully connected layers in a neural network. All these linear transforms or desired functions are executed simultaneously at the speed of light, where each desired function is assigned to a unique wavelength. This allows the broadband optical processor to compute with extreme throughput and parallelism."
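The target behavior described in these quotes can be sketched as a mapping from each wavelength to its own complex No × Ni matrix. The Python below is an idealized illustration, not a model of the optics: the matrices are random placeholders, the wavelength labels are arbitrary, and the function names are hypothetical. It captures the property the diffractive layers are trained to approximate, namely that each wavelength channel is transformed by its own matrix, independently of the others.

```python
import random


def random_complex_matrix(n_out, n_in, rng):
    """Placeholder target transform: a random complex n_out x n_in matrix."""
    return [[complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n_in)]
            for _ in range(n_out)]


def matvec(a, x):
    """Complex matrix-vector product y = A x (plain lists, no NumPy)."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in a]


def multiplexed_forward(targets, inputs):
    """Ideal wavelength-multiplexed processor.

    targets: dict mapping wavelength -> its No x Ni complex matrix
    inputs:  dict mapping wavelength -> length-Ni complex input vector
    Each wavelength channel is transformed only by its own matrix.
    """
    return {wl: matvec(targets[wl], inputs[wl]) for wl in targets}


if __name__ == "__main__":
    rng = random.Random(0)
    n_i, n_o = 4, 3                    # hypothetical pixel counts Ni, No
    wavelengths = [1.00, 1.05, 1.10]   # arbitrary labels, so Nw = 3
    targets = {wl: random_complex_matrix(n_o, n_i, rng) for wl in wavelengths}
    inputs = {wl: [complex(rng.random(), 0.0) for _ in range(n_i)]
              for wl in wavelengths}
    outputs = multiplexed_forward(targets, inputs)
    for wl, y in outputs.items():
        print(wl, [round(abs(v), 3) for v in y])
```

In the physical processor, all Nw channels pass through the same diffractive layers at once, so the design problem is finding one set of layer profiles whose wavelength-dependent response approximates every target matrix simultaneously.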

The researchers showed that such a wavelength-multiplexed design can approximate Nw unique linear transformations with negligible error when its total number of diffractive features, N, is greater than or equal to 2 × Nw × Ni × No. Numerical simulations confirmed this for Nw > 180 distinct transformations, and the result holds for materials with different dispersion properties. Increasing N further, to 3 × Nw × Ni × No, raised Nw to around 2,000 unique transformations, all executed optically in parallel.
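The feature-count condition is simple arithmetic, sketched below with hypothetical values (Ni = No = 64, as for an 8 × 8 pixel grid, which is an assumption rather than the study's setting; the function names are also illustrative). One intuitive reading, offered as an interpretation rather than the paper's stated derivation, is that Nw complex-valued No × Ni matrices contain 2 × Nw × Ni × No real numbers in total, so the threshold matches that count of degrees of freedom.

```python
def min_features(n_w, n_i, n_o, factor=2):
    """Diffractive feature count N = factor * Nw * Ni * No.

    factor=2 is the reported threshold for negligible error; factor=3 is
    the larger design mentioned in the article.
    """
    return factor * n_w * n_i * n_o


def max_transforms(n_features, n_i, n_o, factor=2):
    """Largest Nw that still satisfies N >= factor * Nw * Ni * No."""
    return n_features // (factor * n_i * n_o)


def real_dof(n_w, n_i, n_o):
    """Real numbers in Nw complex No x Ni matrices (2 per complex entry)."""
    return n_w * (n_o * n_i) * 2


if __name__ == "__main__":
    n_i = n_o = 64  # hypothetical: an 8 x 8 input and output grid
    print(min_features(180, n_i, n_o))            # 1474560
    print(min_features(180, n_i, n_o, factor=3))  # 2211840
    print(max_transforms(1_474_560, n_i, n_o))    # 180
```

Under the same rule, a design with one feature fewer than 1,474,560 would support at most 179 transformations at these pixel counts, which shows how directly the achievable parallelism scales with the number of diffractive features.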

Regarding prospects of this new computing design, Ozcan says, "Such massively parallel, wavelength-multiplexed diffractive processors will be useful for designing high-throughput intelligent machine vision systems and hyperspectral processors, and could inspire numerous applications across various fields, including biomedical imaging, remote sensing, analytical chemistry, and material science."

More information: Jingxi Li et al, Massively parallel universal linear transformations using a wavelength-multiplexed diffractive optical network, Advanced Photonics (2023). DOI: 10.1117/1.AP.5.1.016003

Provided by SPIE