Researchers demonstrate human cognitive system designed to enable moral tradeoff decisions

Moral dilemmas, in which one right action must be balanced against another, are a ubiquitous feature of 21st-century life. Unavoidable as they are, though, they are not unique to our modern age. The challenge of accommodating conflicting needs figured as prominently in the lives of our human ancestors as it does in ours today.

Many psychologists and social scientists argue that natural selection has shaped cognitive systems in the human brain for regulating social interactions. But how do we arrive at appropriate judgments, choices and actions when facing a moral dilemma—a situation that activates conflicting intuitions about right and wrong?

An influential view claims that certain dilemmas will always confound us, because our minds cannot reach a resolution by weighing the conflicting moral values against one another. But a new study from UC Santa Barbara and the Universidad del Desarrollo in Santiago, Chile, demonstrates that we humans have a nonconscious cognitive system that does exactly that.

A team of researchers that includes Leda Cosmides at UC Santa Barbara has found the first evidence of a system well designed for making tradeoffs between competing moral values. The team's findings are published in the Proceedings of the National Academy of Sciences.

As members of a cooperative, group-living species, humans routinely face situations in which fully satisfying all of their responsibilities is literally impossible. A typical adult, for example, can have innumerable obligations: to children, elderly parents, a partner or spouse, friends, allies and community members. "In many of these situations, satisfying each duty partially (a compromise judgment) would have promoted fitness better than neglecting one duty entirely to fully satisfy the other," said Cosmides, a professor of psychology and co-director of UC Santa Barbara's Center for Evolutionary Psychology. "The ability to make intuitive judgments that strike a balance between conflicting moral duties may, therefore, have been favored by selection."

According to Ricardo Guzmán, a professor of behavioral economics at the Center for Research on Social Complexity at the Universidad del Desarrollo and the paper's lead author, the function of this moral tradeoff system is to weigh competing ethical considerations and compute which of the available options for resolving the dilemma is most morally "right." Guided by evolutionary considerations and an analysis of analogous tradeoff decisions from rational choice theory, the researchers developed and tested a model of how a system engineered to execute this function should work.

According to the research team, which also includes María Teresa Barbato of the Universidad del Desarrollo and Daniel Sznycer of the University of Montreal and the Oklahoma Center for Evolutionary Analysis at the University of Oklahoma, their new cognitive model makes unique, falsifiable predictions that had never previously been tested and that contradict the predictions of an influential dual-process model of moral judgment. On that model's account, sacrificial dilemmas (ones in which some people must be harmed to maximize the number of lives saved) create an irreconcilable tug-of-war between emotions and reasoning. Emotions issue an internal command ("do not harm") that conflicts with the conclusion reached by reasoning: that it is necessary to sacrifice some lives in order to save the most lives. Because the command is "non-negotiable," striking a balance between these competing moral values should be impossible.

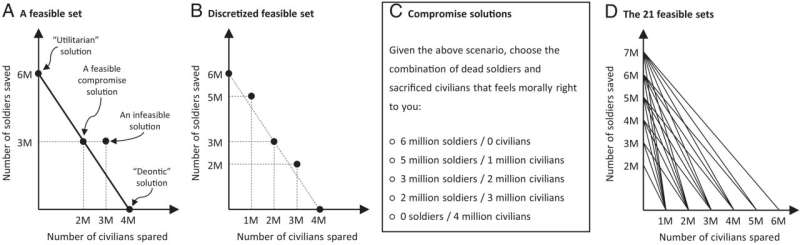

But the researchers' model predicts the opposite: They proposed a system capable of making such tradeoffs, and of making them optimally. "As in previous research, we used a sacrificial moral dilemma," Guzmán explained, "but in contrast to past research, the menu of options for resolving this dilemma included compromise solutions. To test several key predictions, we adapted a rigorous method from rational choice theory to study moral judgment." Rational choice theory is used in economics to model how people make tradeoffs between scarce goods. It posits that people choose the best option available to them, given their preferences for those goods.

"Our method assesses whether a set of judgments respects the Generalized Axiom of Revealed Preferences (GARP)—a demanding standard of rationality," Guzmán continued. "GARP-respecting choices allow for stronger inferences than other tests of moral judgment. When intuitive moral judgments respect GARP, the best explanation is that they were produced by a cognitive system that operates by constructing—and maximizing—a rightness function."
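To make the GARP standard more concrete: in revealed-preference terms, each observation pairs a set of prices (here, how costly each moral good is in a given scenario) with the option chosen. Bundle A is directly revealed preferred to bundle B if B was affordable when A was chosen, and GARP is violated when A is revealed preferred to B (directly or through a chain of such comparisons) while B was chosen at prices under which A would have cost strictly less. The Python below is a minimal sketch of the textbook test (a Warshall transitive closure followed by a pairwise check). It is not necessarily the procedure the authors used, and the function name `satisfies_garp` and the two-good example data are hypothetical.

```python
# Minimal sketch of a textbook GARP test; not the study's actual code.
from typing import List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    """Cost of bundle b at price vector a (inner product)."""
    return sum(x * y for x, y in zip(a, b))


def satisfies_garp(prices: Sequence[Vector], choices: Sequence[Vector],
                   eps: float = 1e-9) -> bool:
    """Return True if the (prices, choices) observations satisfy GARP.

    prices[t] is the price vector faced at observation t, and choices[t]
    is the bundle chosen there. eps absorbs floating-point noise.
    """
    n = len(prices)
    # cost[t][s]: what bundle s would have cost at observation t's prices.
    cost = [[dot(prices[t], choices[s]) for s in range(n)] for t in range(n)]
    # Direct revealed preference: t R0 s if s was affordable when t was chosen.
    revealed: List[List[bool]] = [
        [cost[t][t] >= cost[t][s] - eps for s in range(n)] for t in range(n)
    ]
    # Warshall's algorithm turns R0 into its transitive closure R.
    for k in range(n):
        for i in range(n):
            if revealed[i][k]:
                for j in range(n):
                    if revealed[k][j]:
                        revealed[i][j] = True
    # Violation: t R s, yet t was strictly cheaper than s when s was chosen.
    for t in range(n):
        for s in range(n):
            if revealed[t][s] and cost[s][s] > cost[s][t] + eps:
                return False
    return True


if __name__ == "__main__":
    prices = [(1.0, 2.0), (2.0, 1.0)]
    print(satisfies_garp(prices, [(2.0, 1.0), (1.0, 2.0)]))  # True
    print(satisfies_garp(prices, [(1.0, 2.0), (2.0, 1.0)]))  # False
```

The second data set fails because each chosen bundle was picked even though the other bundle was strictly cheaper at the same prices, so each is revealed strictly preferred to the other, which no single consistent ranking allows.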

A rightness function represents one's personal preferences, Cosmides noted. "In other words, how your mind weighs competing moral goods," she said.

Data collected from more than 1,700 subjects showed that people are fully capable of making moral tradeoffs while satisfying this strict standard of rationality. Subjects were presented with a sacrificial dilemma, similar to those faced by U.S. President Harry Truman and British Prime Minister Winston Churchill during World War II, and asked which option for resolving it felt most morally right. If bombing cities would end the war sooner and ultimately result in fewer deaths overall, with each civilian sacrificed sparing the lives of a larger number of soldiers (hence the term "sacrificial dilemma"), would inflicting harm on innocent bystanders in order to save more lives in total feel like a morally right action? And if so, how many civilians, to save how many more lives?

Each subject gave judgments for 21 different scenarios, which varied the human cost of saving lives. A large majority of subjects felt that compromise solutions were most morally right for some of these scenarios: They chose options that inflict harm on some, but not all, innocent bystanders to save more, but not the most, lives. These compromise judgments are tradeoffs: they strike a balance between a duty to avoid inflicting deadly harm and a duty to save lives.
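A toy model can show why a system that maximizes a rightness function would produce compromise judgments that shift as costs change. The sketch below assumes a hypothetical setup that is not the study's design: 100 civilians are at risk, each civilian harmed spares `k` soldiers, and the hypothetical function `rightness` values both goods with diminishing returns (square roots) and an assumed weight `w` on soldiers saved. Searching every option for the most "right" one picks an interior compromise and harms more civilians when each sacrifice saves more lives.

```python
# Toy illustration with hypothetical numbers; not the study's model or data.
import math

# Assumed setup: 100 civilians are at risk, and each civilian harmed spares
# k soldiers. Varying k varies the human cost of saving lives.
CIVILIANS = 100


def rightness(harmed: int, k: float, w: float = 0.5) -> float:
    """Hypothetical concave rightness function over two moral goods.

    sqrt() gives diminishing returns to each good, so the maximum lies at
    an interior (compromise) option rather than at either extreme.
    w is an assumed weight on soldiers saved relative to civilians spared.
    """
    civilians_spared = CIVILIANS - harmed
    soldiers_saved = k * harmed
    return math.sqrt(civilians_spared) + w * math.sqrt(soldiers_saved)


def most_right_option(k: float, w: float = 0.5) -> int:
    """Return the number of civilians harmed that maximizes rightness."""
    return max(range(CIVILIANS + 1), key=lambda h: rightness(h, k, w))


if __name__ == "__main__":
    # Each k plays the role of one scenario with a different exchange rate.
    for k in (0.25, 1.0, 4.0):
        h = most_right_option(k)
        print(f"k={k}: harm {h} civilians, save {k * h:g} soldiers")
```

With these assumed numbers, k = 1 yields 20 civilians harmed and k = 4 yields 50; neither extreme (0 or 100) is chosen. In standard revealed-preference theory, choices that maximize a fixed, increasing function over budget sets always satisfy GARP, which is why GARP-consistent judgments are read as evidence of such a maximizer.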

As predicted, the judgments people made responded to changing costs, while maximizing rightness: The individual-level data showed that the moral judgments of most subjects were rational—they respected GARP. Yet these were intuitive judgments: Deliberative reasoning cannot explain compromise judgments that respect GARP.

"This," Cosmides said, "is the empirical signature of a cognitive system that operates by constructing—and maximizing—a rightness function. People consistently chose the options they felt were most right, given how they weighed the lives of civilians and soldiers."

Compromise moral judgments do not represent a failure in the pursuit of one right action or another, she continued. Rather, they show the mind striking a balance between myriad obligations, and deftly managing an inescapable quality of the human condition.

More information: Ricardo Andrés Guzmán et al., "A moral trade-off system produces intuitive judgments that are rational and coherent and strike a balance between conflicting moral values," Proceedings of the National Academy of Sciences (2022). DOI: 10.1073/pnas.2214005119


Provided by University of California - Santa Barbara